Beyond chatbots: 3 alternative UXs for building LLM-based features

Chatbots are typically seen as the go-to AI implementation, which makes sense: they offer an intuitive, conversational user experience. But incorporating a chatbot into your product isn’t the only (or even the most impactful) way to use AI to address user pain points.

Because chat interfaces are so open-ended, the user has to take the initiative in figuring out what they can ask of AI and how to ask it. Explaining what you need in a back-and-forth chat format also takes more time than, for example, clicking a button or toggle in a traditional UI.

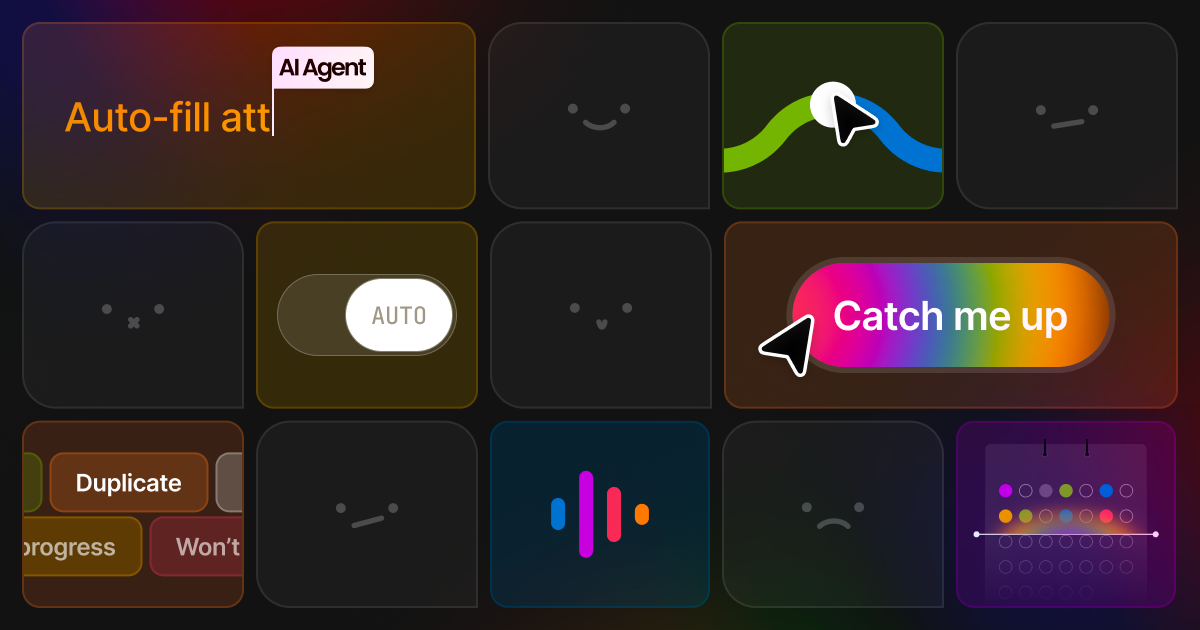

As LLMs’ capabilities continue to expand, so too can the array of UX options we have for AI-backed features. Today we'll share three alternative UXs that don’t rely on a back-and-forth chatbot interface.

3 ways to create new user experiences through LLM-based features (beyond chatbots)

1. Scheduled, reviewable content digests

From drafting emails and summarizing articles to writing content, the use cases for LLM text generation seem endless. However, useful text generation depends heavily on specific, contextual information, which can take substantial work to retrieve and send. The detailed or personal information those queries need often can't be pulled from the web. Instead, users have to gather the relevant data manually before personalized text generation becomes effective.

Think of asking an LLM to write a standup update for you: without detailed context on what you’re working on, it’ll only be able to generate hypothetical updates that someone in your role might give, not an actual update to share with your team.

For scenarios like this, consider an alternative where you pass an LLM user-generated content (like messages and activity logs), allowing it to analyze and generate custom outputs. The content given to the LLM should adhere to specific criteria you set on the backend, for example only activity from the last 7 days, or only tasks that weren't marked as "won't do".
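As a rough illustration, the backend criteria mentioned above could be sketched as a simple filter. The `Task` fields and the `select_context` helper are hypothetical names chosen for this example, not part of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Task:
    # Hypothetical shape of an activity record.
    title: str
    status: str          # e.g. "in progress", "done", "won't do"
    updated_at: datetime


def select_context(tasks, now, window_days=7):
    """Keep tasks updated within the window that are not marked "won't do"."""
    cutoff = now - timedelta(days=window_days)
    return [
        t for t in tasks
        if t.updated_at >= cutoff and t.status != "won't do"
    ]


now = datetime(2024, 5, 10)
tasks = [
    Task("Fix login bug", "done", datetime(2024, 5, 8)),
    Task("Old migration", "done", datetime(2024, 4, 1)),
    Task("Redesign footer", "won't do", datetime(2024, 5, 9)),
]
selected = select_context(tasks, now)
print([t.title for t in selected])
```

Only the records that pass the filter would be sent to the LLM, which keeps the prompt focused on recent, relevant work.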

Given specific data like task progress, chat messages, and status updates to process, the LLM can draft structured messages for specific users. Once that contextual data is sent, the full flow involves these steps:

- Analyzing user-generated content and activity on a subset of objects within a specific time period,

- Compiling multi-object inputs to feed its own drafts, and

- Formatting content output for the specific user who’ll be reviewing the draft. These outputs should be easy to scan and digest quickly, so formatting includes headings, bullet points, and bolded information where applicable.
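The steps above could be sketched as a prompt-building function. This is a minimal sketch: `build_digest_prompt` and the item fields (`type`, `title`, `summary`) are assumptions made for illustration, and the actual call to a model is left out because it depends on the provider you use.

```python
def build_digest_prompt(reviewer, period, items):
    """Compile activity from several objects into one prompt for a draft digest."""
    lines = [
        f"Draft a standup update for {reviewer} covering {period}.",
        "Use headings, bullet points, and bold text so it is easy to scan.",
        "Only use the activity listed below.",
        "",
        "Activity:",
    ]
    # One line per object, tagged with its type so the model can group them.
    for item in items:
        lines.append(f"- [{item['type']}] {item['title']}: {item['summary']}")
    return "\n".join(lines)


prompt = build_digest_prompt(
    "Dana",
    "the last 7 days",
    [
        {"type": "task", "title": "Fix login bug", "summary": "marked done"},
        {"type": "message", "title": "#design", "summary": "agreed on new footer copy"},
    ],
)
print(prompt)
```

The returned draft would then go to the reviewer rather than being sent automatically, in keeping with the reviewable nature of the digest.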

The trigger can be a user-specified time, which works well for any scheduled or regular process, like weekly billing reports and quarterly ARR or sales pipeline updates. To return to our example of a standup update, when given context of an individual's activity logs, the LLM can draft a well-formatted digest of any relevant past, current, and upcoming work for a human to review and use. This UX of scheduled information digests works especially well for content needed on a regular basis, like reports or updates shared frequently.
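For a user-specified schedule, one possible way to work out when the next digest should be generated is shown below. The `next_run` helper is a hypothetical name, and a real product would more likely rely on its existing job scheduler; this only illustrates the time calculation for a weekly trigger.

```python
from datetime import datetime, timedelta


def next_run(now, weekday, hour):
    """Return the next time strictly after `now` that falls on `weekday`
    (Monday is 0) at `hour`:00."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        # The slot for this week has already passed, so use next week's.
        candidate += timedelta(days=7)
    return candidate


now = datetime(2024, 5, 10, 12, 0)          # a Friday at noon
print(next_run(now, weekday=0, hour=9))     # next Monday morning
```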

These types of AI-based features could be further enhanced by also sending the LLM steady-state knowledge: long-term information like team KPIs or roles within a project.

2. Object attribute updates

Manually updating data is often painful. As your database grows and recording updates slips through the cracks, information becomes outdated, resulting in miscommunications and even redundant work.

Luckily, LLMs are well-suited to parsing through updates from users, like actions or messages, to keep data up-to-date in real time: changing the status of a cell, or applying and removing relevant tags over time.

Whether at initial object creation or at subsequent events of object modification, LLMs can process user actions and match them with available attribute options, and then apply relevant attribute values to the object (e.g. Impact: Broken feature; Risk Level: High).

For example, the LLM can:

- Analyze user-generated content inside the object,

- Review the available list of attributes for this object’s class, and

- Identify and select applicable attribute values for the object, one by one.

For this implementation, the trigger would be an object activity event, like a task or sales opportunity being created or edited. The data segmentation step involves identifying and sending the relevant context from the object to the LLM.

With this context, the LLM analyzes what's been provided, assesses the available attribute options, and, guided by your prompt, applies the appropriate value for the user. In our database example, an LLM could assign the right priority level to a newly filed bug or update the risk level of a sales opportunity, all based on the available context data.
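Because the model's output should map onto options that already exist, it can help to check each suggestion before applying it. The sketch below assumes the model's response has already been parsed into a dictionary; `ALLOWED`, `apply_suggestions`, and the option values other than "Broken feature" and "High" are hypothetical.

```python
# Hypothetical attribute options for one object class.
ALLOWED = {
    "Impact": ["Broken feature", "Cosmetic", "Performance"],
    "Risk Level": ["Low", "Medium", "High"],
}


def apply_suggestions(obj, suggestions, allowed):
    """Apply only suggestions whose attribute and value are known options."""
    applied = {}
    for attribute, value in suggestions.items():
        if value in allowed.get(attribute, []):
            obj[attribute] = value
            applied[attribute] = value
    return applied


bug = {"title": "Checkout button does nothing"}
# Hypothetical model output: one valid value, one unknown value, one unknown attribute.
suggestions = {"Impact": "Broken feature", "Risk Level": "Critical", "Owner": "Sam"}
applied = apply_suggestions(bug, suggestions, ALLOWED)
print(applied)
```

Suggestions that don't match a known option are skipped here rather than written to the object, so a malformed response can't introduce new attribute values.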

Of course, people will want different default behavior for attribute updates depending on their own company's processes, so providing customization options is important to ensure this UX works well for any type of customer. This can include letting them customize how attributes are updated, which attributes are tracked, or when updates should be triggered. A good UX should also make what’s happening understandable by visually highlighting the field being changed (like a cursor tracking actions). Other ways to improve visibility include slowing down or staggering changes, or creating a reviewable log of all changes made by AI.
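A reviewable log of AI-made changes could be as simple as recording the old and new value for every update. The `ChangeLog` class below is a hypothetical in-memory sketch; a real implementation would persist entries and tie them to users and objects.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ChangeLog:
    entries: list = field(default_factory=list)

    def record(self, obj, attribute, new_value, source="ai"):
        """Apply a change to `obj` and log the old and new values."""
        self.entries.append({
            "attribute": attribute,
            "old": obj.get(attribute),
            "new": new_value,
            "source": source,
            "at": datetime.now(timezone.utc),
        })
        obj[attribute] = new_value

    def revert_last(self, obj):
        """Undo the most recent logged change and return its entry."""
        entry = self.entries.pop()
        if entry["old"] is None:
            # Simplification: a missing attribute and a None value are treated alike.
            obj.pop(entry["attribute"], None)
        else:
            obj[entry["attribute"]] = entry["old"]
        return entry


log = ChangeLog()
opportunity = {"Risk Level": "Low"}
log.record(opportunity, "Risk Level", "High")
print(opportunity, len(log.entries))
```

Keeping the previous value in each entry is what makes it possible to show users what changed and to let them reverse it.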

3. Real-time text editing in a back-and-forth context

Though chatbots rely on direct, 1:1 conversations between users and AI, LLMs can also observe human interactions to discover helpful supportive actions to take. In fact, they’re well-positioned to handle real-time interactive text editing and generation in existing documents by analyzing user conversations and other data.

Like a stenographer, LLMs can observe discussions between multiple people, then synthesize the information to extract decisions and key points into a single, always-up-to-date source-of-truth document.

For example, the LLM can:

- Assess the structure and content of an existing document,

- Analyze the stream of user-generated content and conversation in natural language,

- Extract the most valuable details for documentation,

- Generate the necessary HTML actions to edit the document accordingly, and

- Format differences for users to review and approve (or reject).
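The final review step in the list above could be sketched with Python's standard `difflib` module. To keep the example short, it works on plain text rather than HTML actions, and `review_diff` and `resolve` are hypothetical helper names.

```python
import difflib


def review_diff(current, proposed):
    """Return a unified diff of the proposed edit for a human to review."""
    return "\n".join(difflib.unified_diff(
        current.splitlines(),
        proposed.splitlines(),
        fromfile="current",
        tofile="proposed",
        lineterm="",
    ))


def resolve(current, proposed, approved):
    """Keep the proposed text only if the reviewer approved it."""
    return proposed if approved else current


current = "Product brief\n- Goal: faster reports"
# Hypothetical edit proposed by the model after new user feedback.
proposed = current + "\n- Scope: include CSV export"

diff = review_diff(current, proposed)
print(diff)
document = resolve(current, proposed, approved=True)
```

Lines starting with `+` are additions and lines starting with `-` are removals, which gives reviewers a compact view of what they are approving or rejecting.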

For this implementation, the trigger is new information being shared about a piece of work, like meeting notes from a product review, a transcript from a sales call, or back-and-forth messaging about the direction of a blog post. The data segmentation here involves providing a reference object that the LLM can cross-reference new information against, like a product brief, campaign plan, or bug report. The LLM will analyze the new information that's been provided and identify whether it contradicts or expands on the existing content. Based on that reasoning, the LLM will determine whether edits are required and where to make them, then update the document accordingly. For example, it might add scope details to a product brief after user feedback is received.

To ensure the best user experience when building out this LLM framework, make all changes made by AI in documents clearly visible, or even require a quick review by a human to confirm accuracy. The more the AI edits feel like a human is doing them, the better. Instead of text popping in all at once, build in small UX details like pauses or backspaces to mimic how we naturally type. Longer changes especially should occur piece by piece so the experience isn’t jarring for users. Similarly, you’ll want to prompt the LLM to mirror the way users are already writing or interacting. For example, if the rest of the document is broken down into concise bullet points, the LLM should deliver information in the same style rather than dropping in an entire paragraph.

One way to do this is to include a cursor that moves, disappears, and reappears. This makes it easier to follow what the AI feature is doing, and lets users feel more aware of all the changes made. Instead of flashy effects or jumping text, focus on small movements that feel like an organic part of the workflow.
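The piece-by-piece rendering described above could be driven by a small generator that hands the UI progressively longer versions of the new text. `typing_steps` is a hypothetical helper; pauses, backspaces, and cursor movement would be handled by whatever front-end code renders each step.

```python
def typing_steps(text, chunk_size=3):
    """Yield progressively longer prefixes of `text`, one chunk at a time."""
    for end in range(chunk_size, len(text) + chunk_size, chunk_size):
        yield text[:end]


steps = list(typing_steps("Add CSV export", chunk_size=4))
for step in steps:
    # A real UI would render each step with a short pause in between.
    print(step)
```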

Additionally, humans still want final control over documents and text, so you should make it easy to undo, preview, or reject changes. This helps users feel like AI is assisting their work rather than interrupting or overpowering it.

In the future, AI will operate more and more often behind the scenes, without requiring users to message a chatbot or explicitly request AI's assistance. This shift from chatbots to other implementations transforms AI from a tool users must manage themselves into an intuitive assistant, able to handle tasks and make suggestions autonomously based on context and past user behavior. Ultimately, this will free users to focus on their core tasks while feeling supported by AI, instead of having to manage the AI’s functionality directly.